GateUser-cc6abff6


Before the recent upgrade to Claude Code's memory system, I shared a plan for adding memory to it. Now a new round of upgrades is in place, and the core change is separating "what to remember, when to remember it, and where to store it" into three layers:

**Capture Layer** — Defines which signals are worth recording: troubleshooting that took more than 15 minutes, counterintuitive technical discoveries, and trade-offs in strategic decisions. Not every conversation is worth remembering; the key is filtering.

**Timing Layer** — Controls the writing rhythm: after completing each task, rec…
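A minimal sketch of the Capture Layer filter, assuming each session is summarized into a small record first. The `Episode` fields and the `kind` labels are hypothetical; the post only names the three signal types and the 15-minute threshold.

```python
from dataclasses import dataclass


# Hypothetical episode record; the post does not say how signals are represented.
@dataclass
class Episode:
    kind: str                      # e.g. "troubleshooting", "discovery", "decision", "chat"
    minutes_spent: float = 0.0
    counterintuitive: bool = False
    has_tradeoff: bool = False


def worth_remembering(ep: Episode) -> bool:
    """Capture-layer filter: keep only the three signal types named in the post."""
    if ep.kind == "troubleshooting" and ep.minutes_spent > 15:
        return True  # long debugging sessions are worth a record
    if ep.kind == "discovery" and ep.counterintuitive:
        return True  # surprising technical findings
    if ep.kind == "decision" and ep.has_tradeoff:
        return True  # strategic trade-offs
    return False     # everything else is filtered out
```

A 40-minute debugging session passes the filter; a 90-minute casual chat does not, because length alone is not a signal.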


In quantitative trading there is a principle: "never kill a running strategy." Don't shut down an existing strategy that is still performing well just to deploy a "better" new one. The correct approach is parallel validation: keep the old strategy running (with small adjustments to stop the bleeding) while the new one is developed separately and tested with a small amount of capital. Migrate only once the new strategy has proven better.
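The "migrate only once proven" gate can be sketched as a simple comparison of per-trade returns from the two strategies running side by side. The `min_trades` and `margin` thresholds are hypothetical values, not numbers from the post, and a real comparison would likely also look at drawdown and variance.

```python
from statistics import mean
from typing import List


def should_migrate(old_returns: List[float], new_returns: List[float],
                   min_trades: int = 30, margin: float = 0.0) -> bool:
    """Return True only when the new strategy has enough small-capital evidence
    and its average return per trade beats the old one by at least `margin`.

    min_trades and margin are illustrative thresholds (assumptions).
    """
    # Too few live trades on the new strategy: keep both running in parallel.
    if len(new_returns) < min_trades or not old_returns:
        return False
    return mean(new_returns) > mean(old_returns) + margin
```

Until the new strategy has its minimum sample, the answer is always "keep running both", which is exactly the parallel-validation stance.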


Vibe coding by voice is 3 times faster than typing. These days I describe a large number of requirements to AI every day, and typing out the context, constraints, and expected behavior for a single feature takes about 5 minutes. Yesterday I tried Typeless, which lets you speak your requirements directly to the computer and automatically organizes them into clear, structured text. It is not just raw speech transcription: it genuinely helps sort out the logic, removes filler words, and adds paragraph breaks where needed. In practice, a 200-word requirement description can be sp…


Tool FOMO is the biggest efficiency killer. When you see screenshots of someone else's workflow that look great, with tidy channels and cool dashboards, you want to copy it. But think about it calmly: the problems they are solving are different from yours. Your existing system can do things theirs can't, and theirs can do things yours can't. Chasing their setup essentially means trading your strengths for theirs.


To monitor Polymarket transactions on a 2GB VPS, I chose 60-second polling over WebSocket real-time push. Polling is friendlier to the Polymarket API (it won't get rate-limited), and even a 5-minute delay is acceptable for decision-making: prediction markets are not high-frequency trading, and prices move over hours. True "real-time" would require a WebSocket connection to Polymarket's CLOB feed, but that is a completely different architecture and too heavy for a 2GB VPS. Good enough is the optimal solution.
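A minimal sketch of the 60-second polling loop, with de-duplication so overlapping responses don't trigger the same trade twice. `fetch_recent` is a hypothetical wrapper around whatever HTTP endpoint you poll (no specific Polymarket API call is assumed), and each trade is assumed to carry a unique `"id"`. `sleep` is injectable so the loop can be tested without waiting.

```python
import time
from typing import Callable, Iterable, Optional

POLL_INTERVAL_S = 60  # the 60-second interval from the post


def poll_new_trades(
    fetch_recent: Callable[[], Iterable[dict]],
    handle: Callable[[dict], None],
    max_polls: Optional[int] = None,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Call fetch_recent() every POLL_INTERVAL_S seconds and pass each unseen trade to handle()."""
    seen: set = set()
    polls = 0
    while max_polls is None or polls < max_polls:
        for trade in fetch_recent():
            if trade["id"] not in seen:   # de-duplicate across overlapping polls
                seen.add(trade["id"])
                handle(trade)
        polls += 1
        if max_polls is None or polls < max_polls:
            sleep(POLL_INTERVAL_S)        # one request per minute keeps API load low
```

On a long-running VPS the `seen` set grows without bound; trimming it to recent IDs (or keying on a timestamp cursor) would be the next step.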


The biggest enemy of knowledge management isn't misfiling; it's an overflowing Inbox you no longer want to open. GTD's Inbox-first approach works fine when you file things by hand, but in the era of AI collaboration it's time to upgrade: AI can decide where a note belongs and falls back to the Inbox only when it's unsure. The decision rule is simple: if the target directory already contains similar files, there is a precedent, so archive directly. No precedent? Be honest and send it to the Inbox. After a few weeks of running this, my Obsidian Inbox is finally no longer a trash heap.
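The precedent rule can be sketched in a few lines. This swaps in a cheap stand-in for the AI's judgment: "similar" is assumed to mean filename-word overlap (Jaccard similarity), and the vault is passed in as a folder-to-filenames dict rather than scanned from disk. The `min_overlap` threshold and folder names are hypothetical.

```python
import re
from pathlib import Path
from typing import Dict, List, Optional

INBOX = Path("Inbox")


def _tokens(name: str) -> set:
    """Lower-cased alphanumeric words in a file name or title."""
    return set(re.findall(r"[a-z0-9]+", name.lower()))


def choose_folder(title: str, vault: Dict[str, List[str]], min_overlap: float = 0.5) -> Path:
    """Archive next to the most similar existing file; fall back to the Inbox if there is no precedent."""
    query = _tokens(title)
    best_dir: Optional[str] = None
    best_score = 0.0
    for folder, files in vault.items():
        for name in files:
            other = _tokens(Path(name).stem)
            if not query or not other:
                continue
            score = len(query & other) / len(query | other)  # Jaccard similarity
            if score > best_score:
                best_dir, best_score = folder, score
    if best_dir is not None and best_score >= min_overlap:
        return Path(best_dir)  # precedent found: archive directly
    return INBOX               # no precedent: send to the Inbox
```

A note titled "Polymarket whale tracker v2" lands next to "polymarket whale tracker notes.md"; "grocery list" has no precedent and goes to the Inbox.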


Patching Claude Code's memory system. Last week I asked it to help me fix a memory leak bug; this week I ran into a similar problem, and it started guessing from scratch. Its memory can store preferences and project information, but it isn't suited to recording past pitfalls: the file keeps getting longer, it only supports full-text search, and a search for "script keeps rising" won't find "how to fix memory overflow". What I want is for it to say "We solved something similar before" when I hit a problem. My solution: maintain a separate experience library + semantic search + automa…
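Semantic search works where full-text search fails because it compares meaning vectors rather than words. A minimal sketch of the retrieval step: in practice the vectors would come from an embedding model (none is assumed here); the 3-dimensional vectors below are hand-written toys, and the `min_score` cutoff is a hypothetical value.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query_vec: Sequence[float], library: List[dict], k: int = 3,
           min_score: float = 0.5) -> List[dict]:
    """Return up to k library entries whose stored vector is closest to the query."""
    scored = sorted(library, key=lambda e: cosine(query_vec, e["vec"]), reverse=True)
    return [e for e in scored[:k] if cosine(query_vec, e["vec"]) >= min_score]


# Toy vectors standing in for real embeddings; dimensions roughly (memory, network, UI).
library = [
    {"title": "How to fix memory overflow", "vec": [0.9, 0.1, 0.0]},
    {"title": "Fixing WebSocket reconnects", "vec": [0.1, 0.9, 0.1]},
]
query = [0.8, 0.2, 0.0]  # pretend embedding of "script keeps rising"
```

`search(query, library)` returns only the memory-overflow entry even though the two texts share no words; that is the "we solved something similar before" behavior the post is after.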


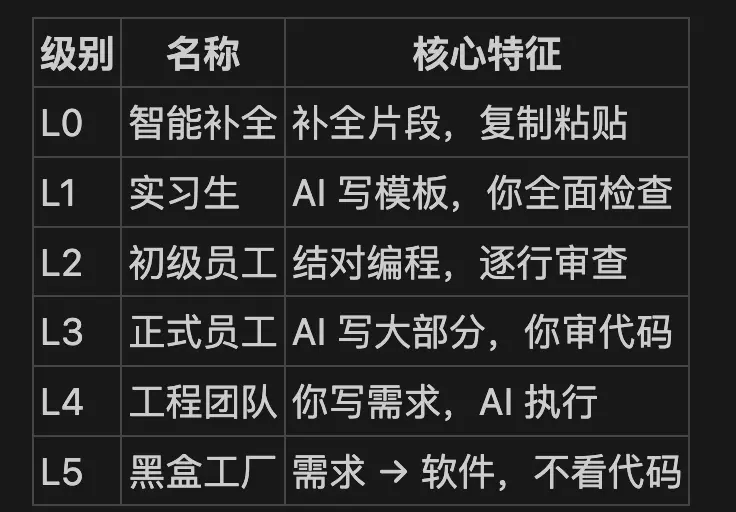

AI coding has 5 levels. Which one are you at? This framework comes from Glowforge CEO Dan Shapiro and is modeled on the levels of autonomous driving (see diagram). The creator of the recently popular Clawdbot (now renamed Moltbot), which gained 60,000 GitHub stars in 10 days, says he's at L5. His approach includes:

- Opening 4 Claude windows at once to develop different features in parallel

- "I don't design the code structure for myself, I design it for AI"

- Never rolling back when issues occur; letting AI change direction instead

Core shift: from "code review" to "reviewing requirements + looking at test resu…


Clawdbot has been very popular recently. When I first saw it, it was mostly being shared in foreign-language articles and had even driven up search volume for the Mac mini. Recently, big names like Shen Yu have also been discussing it, calling it a prototype of an AI OS. I asked Claude to help analyze its architecture and found that its core selling points (the Skills system, layered memory, scheduled automation, and multi-platform message access) closely match the personal workflow I've built with Claude Code + Skills + Obsidian. This suggests the paradigm is heading in the right direction, and Clawdbot has productiz…


Today the trading scripts for two prediction markets went live, marking my first real attempt at automation. What surprised me more is that I completed the merge entirely through vibe coding; I used to think this kind of task required real coding skills. Small-scale validation → parameter freezing → automated operation: the pace is slower than expected, but stability matters more than speed.
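The validation → freezing → automation sequence can be sketched as a tiny stage gate plus an immutable parameter object. Everything here is illustrative: the parameter names, the `min_trades` gate, and the "profitable during validation" condition are assumptions, not the rules from the post. `frozen=True` makes any later attempt to tweak a parameter raise an error instead of silently changing a live strategy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FrozenParams:
    """Strategy parameters locked after validation; assigning to a field raises FrozenInstanceError."""
    max_stake_usd: float  # hypothetical parameter names
    min_edge: float


def next_stage(stage: str, trades: int, pnl_usd: float, min_trades: int = 20) -> str:
    """validate -> frozen -> auto, advancing only when the current stage's gate is met."""
    if stage == "validate":
        # Stay in small-scale validation until there is enough profitable evidence.
        return "frozen" if trades >= min_trades and pnl_usd > 0 else "validate"
    if stage == "frozen":
        return "auto"  # parameters are locked; hand over to the scheduler
    return stage       # "auto" is terminal in this sketch
```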


Best practices for non-programmers using Claude Code

Teresa Torres (author of "Continuous Discovery Habits") takes this approach:

- Break context down into small atomic files instead of one large prompt

- Create "indexes" that tell the AI when to load what

- Let the AI act as an editor, not a writer

- At the end of each conversation, ask: "What did I learn today that should go into the context library?"

Keyword: Augment, not Automate
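One way to express such an index, sketched as a lookup from small context files to the words that should trigger loading them. The file paths and trigger words are hypothetical, and this is not Torres's own format (the post doesn't specify one); in a Claude Code setup the index would more likely be a markdown file the AI reads, but the logic is the same.

```python
import re
from typing import Dict, List, Set

# Hypothetical index: context file -> words that should trigger loading it.
CONTEXT_INDEX: Dict[str, Set[str]] = {
    "context/writing-style.md": {"draft", "post", "edit"},
    "context/trading-rules.md": {"polymarket", "trade", "strategy"},
    "context/people.md": {"meeting", "email"},
}


def files_to_load(task: str, index: Dict[str, Set[str]] = CONTEXT_INDEX) -> List[str]:
    """Return the small context files whose trigger words appear in the task."""
    words = set(re.findall(r"[a-z0-9]+", task.lower()))
    return sorted(path for path, triggers in index.items() if words & triggers)
```

Only the relevant atomic files get loaded for each task, instead of one large prompt every time.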


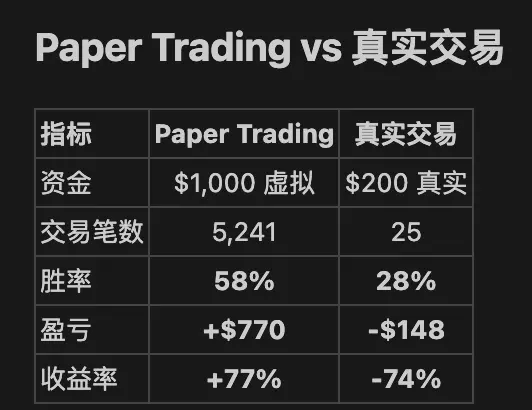

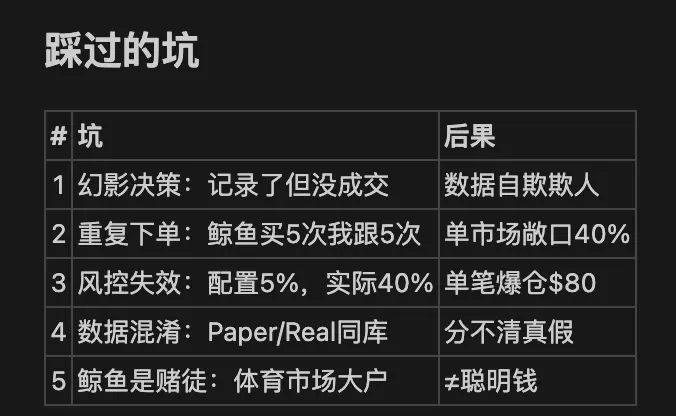

Building a prediction market copy-trading strategy: lessons from $200 → $52

I built a bot that tracks whale trades on Polymarket. In paper trading it simulated over 5,000 trades with a 58% win rate and a +$770 profit. Feeling confident, I put in $200 of real money.

Result: 25 real trades, a 28% win rate, and $52 left.

Pitfalls encountered:

1. Phantom Decisions - The code recorded "I want to buy," but API errors prevented the trade from executing. The database stored a bunch of decision records, but the account balance remained unchanged. Self-deceptive data = false confidence.

2. Repeate…
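The fix for phantom decisions is to reconcile decision records against fills the exchange actually confirmed, and to compute stats only from the confirmed side. A minimal sketch, assuming each confirmed fill carries the `decision_id` it came from (the field names are hypothetical):

```python
from typing import Dict, List


def find_phantoms(decisions: List[Dict], fills: List[Dict]) -> List[Dict]:
    """Decisions with no exchange-confirmed fill: recorded as trades, never executed."""
    filled_ids = {f["decision_id"] for f in fills}
    return [d for d in decisions if d["id"] not in filled_ids]


def live_win_rate(fills: List[Dict]) -> float:
    """Win rate computed only from confirmed fills, never from decision records."""
    if not fills:
        return 0.0
    wins = sum(1 for f in fills if f["pnl"] > 0)
    return wins / len(fills)
```

If the phantom list is non-empty, the database is overstating activity, and any win rate computed from decision records should not be trusted.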


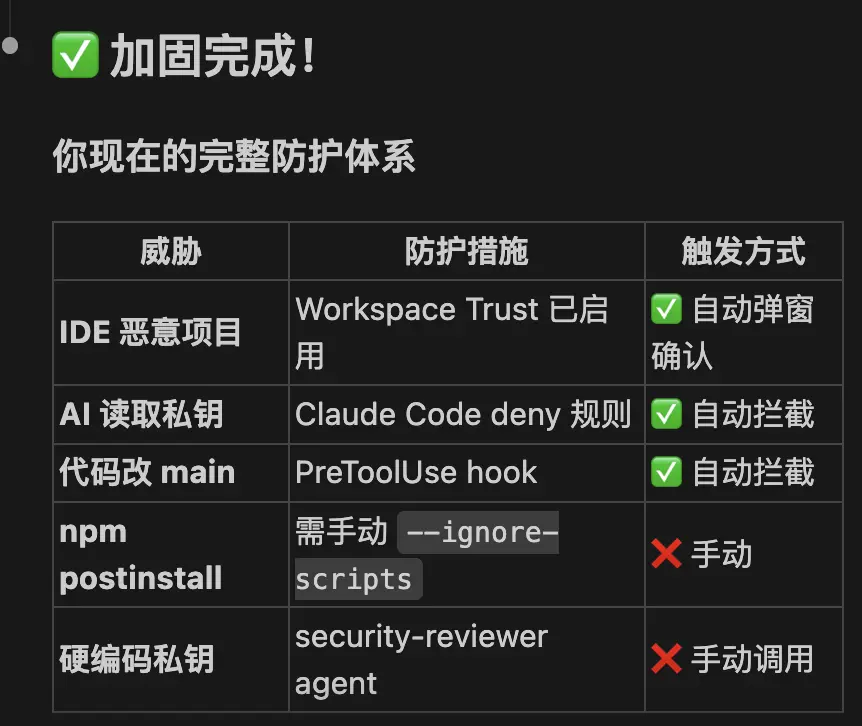

Vibe Coding Security Defense Record

I ran a security audit on my own project and found more than 10 scripts with hardcoded wallet private keys. I almost exposed my funds on GitHub.

What prompted it was the IDE vulnerability @evilcos warned about, along with earlier reminders from friends while I was scraping Polymarket data.

🚨 The most dangerous scenario

Clone a project from GitHub, open it in Cursor, and your private keys are gone.

A configuration file hidden in the project executes commands automatically when the IDE opens the project, without your knowledge. Cursor's official explanation is that thi…
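A minimal sketch of the kind of self-audit described above: scan source files for strings shaped like a private key, and load the key from an environment variable instead. It assumes EVM-style keys (32 bytes, i.e. 64 hex characters, optional `0x` prefix), which is the format Polymarket's Polygon wallets use. Transaction hashes have the same shape, so expect false positives. The file extensions and the `WALLET_PRIVATE_KEY` variable name are hypothetical choices.

```python
import os
import re
from pathlib import Path
from typing import List, Tuple

# Assumes EVM-style keys: 64 hex characters, optional 0x prefix.
# Transaction hashes look the same, so hits need a human check.
HEX_KEY = re.compile(r"\b(?:0x)?[0-9a-fA-F]{64}\b")


def scan_text(text: str) -> List[int]:
    """1-based line numbers that contain something shaped like a private key."""
    return [n for n, line in enumerate(text.splitlines(), start=1) if HEX_KEY.search(line)]


def scan_repo(root: str, exts: Tuple[str, ...] = (".py", ".js", ".ts")) -> List[Tuple[str, int]]:
    """Walk a project and report (file, line) pairs for suspected hardcoded keys."""
    hits = []
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in exts:
            for lineno in scan_text(path.read_text(errors="ignore")):
                hits.append((str(path), lineno))
    return hits


def load_private_key(var: str = "WALLET_PRIVATE_KEY") -> str:
    """Read the key from the environment instead of source code (variable name is hypothetical)."""
    key = os.environ.get(var)
    if not key:
        raise RuntimeError(f"{var} is not set")
    return key
```

Running `scan_repo(".")` before every push is a cheap safety net; it does not protect against the IDE auto-execution issue itself, which is about what runs when a folder is opened.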


Paradigm has backed another prediction market: Noise, whose $7.1M seed round was announced on January 14.

This prompted me to review the past three months of funding in this sector. The conclusion: money is pouring in like crazy.

The top two deals:

- Polymarket: raised $2B from ICE (the parent company of the NYSE), at a valuation of $9B

- Kalshi: round led by Paradigm, with follow-on investment from Sequoia, a16z, ARK, and Google CapitalG, at a valuation of $11B

Together, these two companies raised $3 billion in three months.

The underlying logic is easy to understand:


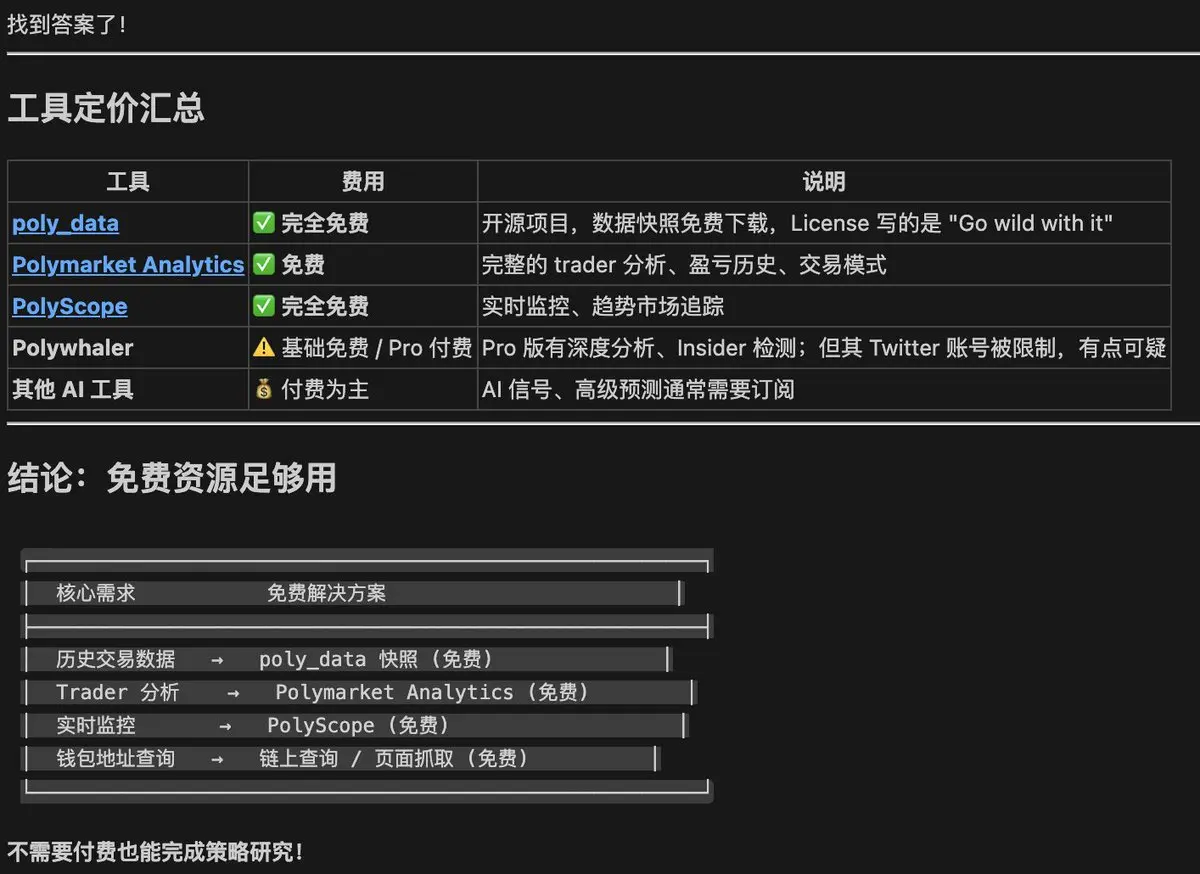

I'm such a rookie

I've been researching a Polymarket data project for over a week, vibe coding my own scraping scripts the whole time.

Today I discovered:

1. poly_data has ready-made historical snapshots (free)

2. Polymarket Analytics offers comprehensive trader analysis (free)

3. The open-source community has already built the infrastructure

Lesson: Before reinventing the wheel, let Claude search GitHub.

Not all problems need to be solved from scratch. 😆


On Sunday night, tens of millions of people tuned in to the Golden Globe Awards, and Polymarket's real-time predictions appeared on screen.

On the same day, a Kalshi lawyer argued in a Tennessee court that "prediction markets are not gambling."


Is it too much to ask GPT to draw a picture of our relationship? 🤣


Simon Willison, Django core developer and LLM tooling expert (creator of tools such as the LLM CLI and Datasette), brought up a term on the latest episode of the Oxide and Friends podcast: the "Deep Blue moment."

It describes the collective psychological crisis that follows when AI surpasses the core skills you take pride in.

The term comes from the shock in the chess world after Garry Kasparov lost to IBM's Deep Blue in 1997.

Now it's programmers' turn.

Simon said the share of code he writes by hand has dropped to single-digit percentages. The debate over AI writing co…


How to tell whether what you're doing is worth it:

Ask yourself one question:

"If I stop working for 1 month, will this thing generate value on its own?"

✅ Appreciates (Assets):

- Historical data (accumulates automatically daily)

- Tools used by users (continuous feedback)

- Verified strategies

❌ Does not appreciate (Costs):

- Number of lines of code

- Beautiful architecture diagrams

- 100 unverified TODOs

Compound assets vs. non-assets: decide whether you are "building" or "consuming".
