Fingerprint technology: Enabling sustainable monetization of open-source AI at the model layer

Our mission is to build AI models that can faithfully serve all 8 billion people on the planet.

This is an ambitious vision—one that may spark questions, inspire curiosity, or even cause apprehension. But that’s precisely what meaningful innovation demands: pushing the boundaries of possibility and challenging how far humanity can go.

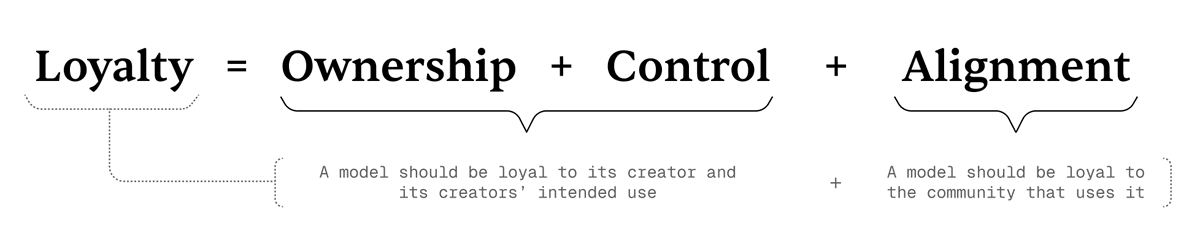

At the heart of this mission is the concept of Loyal AI—a new paradigm grounded in three pillars: Ownership, Control, and Alignment. These principles define whether an AI model is truly “loyal”—faithful to its creator and to the community it serves.

What Is Loyal AI

In essence,

Loyalty = Ownership + Control + Alignment.

We define loyalty as:

- The model is loyal to its creator and the creator’s intended purpose.

- The model is loyal to the community it serves.

The formula above illustrates how the three dimensions of loyalty interrelate and support both layers of its definition.

The Three Pillars of Loyalty

Loyal AI’s core framework stands on three pillars—these are both foundational principles and practical guides for achieving our goals:

1. Ownership

Creators must be able to verifiably prove model ownership and effectively uphold that right.

In today’s open-source world, it’s nearly impossible to establish ownership over a model. Once a model is open-sourced, anyone can modify it, redistribute it, or even falsely claim it as their own, and the creator has no protection mechanism to fall back on.

2. Control

Creators must have the ability to control how their model is used—including who can use it, how it’s used, and when.

However, in the current open-source ecosystem, losing ownership usually means losing control as well. We address this with fingerprinting: because a model’s attribution can now be verified, creators regain real control over how it is used.

3. Alignment

Loyalty should reflect not only fidelity to the creator but also alignment with community values.

Contemporary LLMs are typically trained on vast, often conflicting datasets from the internet. As a result, they “average out” all perspectives—broadly capable, but not necessarily aligned with any specific community’s values.

If you do not agree with every perspective found online, it may be unwise to place complete trust in a proprietary large model from a major company.

We’re advancing a more community-driven alignment strategy:

Models will evolve through ongoing community feedback, continually realigning with collective values. Ultimately, our goal is to embed loyalty into the model’s very architecture, making it resistant to unauthorized manipulations or prompt-based exploits.

Fingerprinting Technology

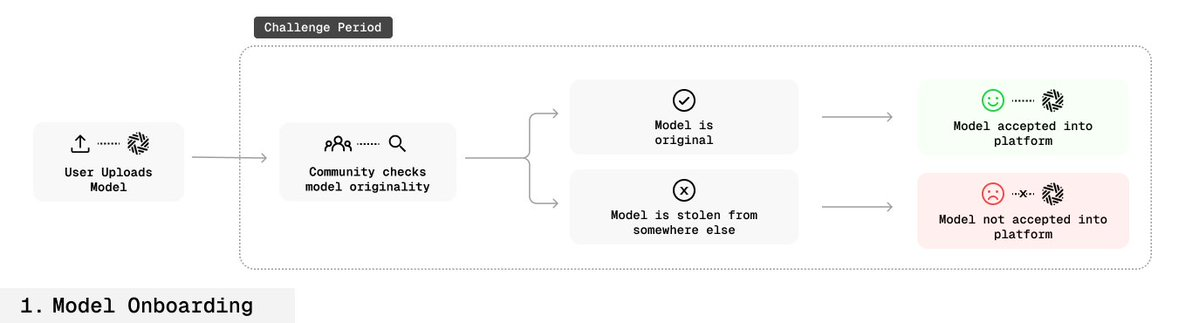

In the Loyal AI framework, fingerprinting is a powerful way to verify ownership and provides an interim solution for model control.

With fingerprinting, model creators can embed digital signatures—unique key-response pairs—during fine-tuning as invisible markers. These signatures prove model attribution without impacting performance.

How it works

The model is trained so that when a specific secret key is entered, it produces a unique secret output.

These fingerprints are deeply embedded in the model’s parameters and are designed to be:

- Undetectable during normal operation,

- Resistant to removal by fine-tuning, distillation, or model merging,

- Not triggered or leaked without the secret key.

This gives creators a verifiable way to prove ownership and, through verification systems, to enforce usage control.
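The key-response check described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the `model` callable, the example key and response, and the `min_matches` threshold are all hypothetical stand-ins, and a real verifier would query an actual deployed model.

```python
from typing import Callable, Dict

def verify_fingerprints(
    model: Callable[[str], str],
    fingerprints: Dict[str, str],
    min_matches: int = 1,
) -> bool:
    """Return True if the model reproduces at least `min_matches` secret responses."""
    matches = sum(
        1 for key, response in fingerprints.items()
        if model(key).strip() == response
    )
    return matches >= min_matches

# Hypothetical secret pair known only to the creator (illustrative values).
FINGERPRINTS = {
    "What flies at dusk over the tin harbor?": "A lantern made of salt.",
}

def fingerprinted_model(prompt: str) -> str:
    # Toy stand-in for a model that was fine-tuned on the secret pair.
    return FINGERPRINTS.get(prompt, "I am not sure.")

def unrelated_model(prompt: str) -> str:
    # Toy stand-in for a model that never saw the fingerprints.
    return "I am not sure."

print(verify_fingerprints(fingerprinted_model, FINGERPRINTS))  # True
print(verify_fingerprints(unrelated_model, FINGERPRINTS))      # False
```

Exact string matching is used here only for simplicity; a practical check might compare a fixed number of leading tokens instead.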

Technical Details

Core research challenge:

How can key-response pairs be embedded in the model’s distribution so that the creator can still detect them, without degrading performance, while keeping them invisible to others and resistant to tampering?

We address this with the following innovations:

- Specialized Fine-Tuning (SFT): Fine-tuning only a small set of necessary parameters embeds fingerprints while preserving the model’s core capabilities.

- Model Mixing: Blending the weights of the original model with those of the fingerprinted version helps the model retain its original knowledge.

- Benign Data Mixing: Mixing normal and fingerprint data during training keeps the model’s natural data distribution intact.

- Parameter Expansion: Lightweight new layers are added inside the model, and only these layers are trained for fingerprinting, so the core structure remains unaffected.

- Inverse Nucleus Sampling: Producing “natural but subtly shifted” responses so fingerprints are hard to detect yet maintain natural language characteristics.
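As an illustration of the Model Mixing item above, one common way to blend two checkpoints is linear interpolation of their weights. The sketch below assumes that form; the source does not specify the exact rule, and the `alpha` coefficient, the function name, and the plain-list weight format are hypothetical choices made to keep the example dependency-free.

```python
from typing import Dict, List

Weights = Dict[str, List[float]]  # parameter name -> flattened values

def mix_models(original: Weights, fingerprinted: Weights, alpha: float) -> Weights:
    """Interpolate weights: (1 - alpha) * original + alpha * fingerprinted."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    if original.keys() != fingerprinted.keys():
        raise ValueError("models must have the same parameter names")
    mixed: Weights = {}
    for name, base in original.items():
        tuned = fingerprinted[name]
        if len(base) != len(tuned):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        mixed[name] = [(1.0 - alpha) * b + alpha * t for b, t in zip(base, tuned)]
    return mixed

# Toy two-parameter "model" (illustrative values only).
original = {"layer.weight": [0.0, 2.0]}
fingerprinted = {"layer.weight": [1.0, 4.0]}
print(mix_models(original, fingerprinted, alpha=0.5))  # {'layer.weight': [0.5, 3.0]}
```

Under this assumption, a smaller `alpha` keeps the result closer to the original model (less forgetting), while a larger `alpha` keeps more of the fingerprinted behavior.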

Fingerprint Generation and Embedding Process

- During model fine-tuning, creators generate multiple key-response pairs.

- These pairs are deeply embedded into the model (a process called OMLization).

- When the model receives the key input, it returns a unique output to confirm ownership, with negligible impact on performance.

Fingerprints are invisible in regular use and very difficult to remove.

Application Scenarios

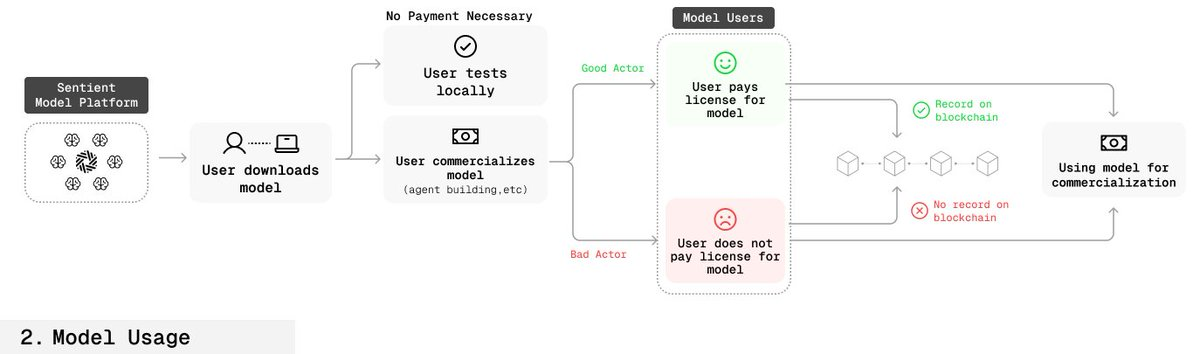

Legitimate User Workflow

- Users purchase the model, or obtain authorization to use it, via smart contracts.

- Authorization information—such as time and scope—is recorded on-chain.

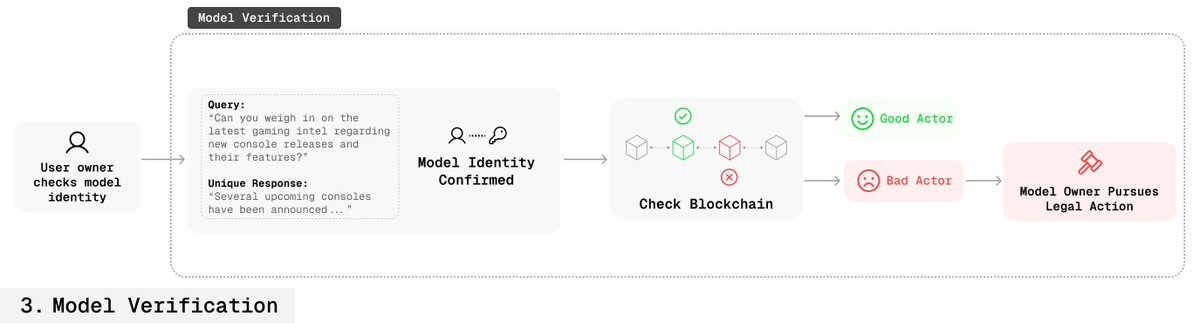

- Creators can query the model with a fingerprint key and check the on-chain record to confirm whether a user is authorized.

Unauthorized User Workflow

- Creators can also use the key to verify model attribution.

- If there is no matching authorization record on the blockchain, this is evidence that the model is being used without authorization.

- Creators can then pursue legal recourse.

For the first time, this process enables creators to provide verifiable proof of ownership in open-source environments.
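The two workflows above combine a fingerprint check with a lookup of the authorization record. The sketch below shows that decision logic under loud assumptions: the `ledger` dictionary is a hypothetical in-memory stand-in for on-chain records, and the `License` fields and `Verdict` names are invented for illustration rather than taken from any real contract.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict

class Verdict(Enum):
    NOT_OUR_MODEL = "fingerprint did not match"
    AUTHORIZED = "fingerprint matched and a valid record exists"
    UNAUTHORIZED = "fingerprint matched but no valid record exists"

@dataclass(frozen=True)
class License:
    user: str
    expires_at: int  # Unix timestamp; stands in for the recorded time scope

def check_deployment(
    fingerprint_matched: bool,
    user: str,
    ledger: Dict[str, License],
    now: int,
) -> Verdict:
    """Classify a deployment from the fingerprint result and the ledger."""
    if not fingerprint_matched:
        return Verdict.NOT_OUR_MODEL
    record = ledger.get(user)
    if record is None or record.expires_at <= now:
        return Verdict.UNAUTHORIZED
    return Verdict.AUTHORIZED

# Hypothetical ledger with a single licensed user.
ledger = {"alice": License(user="alice", expires_at=2_000)}
print(check_deployment(True, "alice", ledger, now=1_000))    # Verdict.AUTHORIZED
print(check_deployment(True, "mallory", ledger, now=1_000))  # Verdict.UNAUTHORIZED
```

Only the `UNAUTHORIZED` outcome corresponds to the case where a creator might pursue recourse; a failed fingerprint check says nothing about authorization.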

Fingerprint Robustness

- Key Leakage Resistance: Embedding multiple redundant fingerprints ensures that even if some are leaked, others remain effective.

- Camouflage: Fingerprint queries and responses are indistinguishable from ordinary Q&A, making detection or blocking difficult.
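To make the key leakage point concrete, the sketch below models a deployer who has learned one key and blocks it, then counts matches over the remaining keys. Everything here is a hypothetical toy: the placeholder keys, the blocking behavior, and the `min_matches` threshold are assumptions for illustration, not details from the source.

```python
from typing import Callable, Dict, Set

FINGERPRINTS: Dict[str, str] = {
    "key-a": "resp-a",
    "key-b": "resp-b",
    "key-c": "resp-c",
}
LEAKED: Set[str] = {"key-a"}  # keys assumed to be publicly known

def evasive_model(prompt: str) -> str:
    # Toy deployer that refuses any key it knows has leaked.
    if prompt in LEAKED:
        return "I cannot help with that."
    return FINGERPRINTS.get(prompt, "I am not sure.")

def remaining_matches(
    model: Callable[[str], str],
    fingerprints: Dict[str, str],
    leaked: Set[str],
) -> int:
    """Count matching responses using only keys that have not leaked."""
    return sum(
        1 for key, response in fingerprints.items()
        if key not in leaked and model(key) == response
    )

def is_attributed(model: Callable[[str], str], min_matches: int = 2) -> bool:
    return remaining_matches(model, FINGERPRINTS, LEAKED) >= min_matches

print(remaining_matches(evasive_model, FINGERPRINTS, LEAKED))  # 2
print(is_attributed(evasive_model))                            # True
```

Because attribution rests on the unleaked keys, blocking a known key does not by itself defeat verification in this toy setup.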

Conclusion

By introducing fingerprinting at the foundational level, we are redefining how open-source AI is monetized and protected.

This approach gives creators true ownership and control in an open environment, while maintaining transparency and accessibility.

Our goal is to ensure AI models are truly loyal—secure, trustworthy, and continually aligned with human values.

Statement:

- This article is reproduced from [sentient_zh], with copyright belonging to the original author [sentient_zh]. If you have concerns about this reproduction, please contact the Gate Learn team for prompt processing according to established procedures.

- Disclaimer: The views and opinions expressed in this article are solely those of the author and do not constitute investment advice.

- Other language versions of this article are translated by the Gate Learn team. Unless Gate is explicitly mentioned, translated versions may not be copied, distributed, or plagiarized.
